Analyzing and improving software performance using Six Sigma tools and techniques.

An examination of Six Sigma tools, taken from the Analyze and Improve DMAIC project phases.

Introduction. The Six Sigma for load testing article gives an overview of mapping the DMAIC project phases to a load testing project. This article outlines specific Six Sigma tools and techniques that are applicable to load testing project goals.

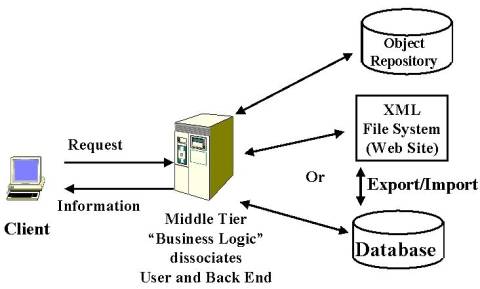

The Analyze phase of Six Sigma DMAIC. There are two perspectives to use as reference points when conducting the initial analysis for a load testing DMAIC Six Sigma project. The first is process workflow and the second is resources (CPU, memory, database, network, etc.). In practice both are used to build the complete picture and identify the root cause of the issue.

The initial resource measurements, taken during the Measure phase, will typically be for the transaction as a whole. These initial measures include the total time the transaction (for example, Place Order) takes to complete. Following this high-level view of resource consumption for the whole process, the process is broken down into a detailed workflow in order to isolate the component or components causing the bottleneck or other performance issue.

To analyze any process, it first needs to be mapped out into a basic workflow: a series of steps that satisfy the goals of the process. The initial workflow diagram contains the main system components, as in the example above. This initial process map is often called level 0, and each main process can be broken down into its own process map at increasing levels of detail (level 1, level 2, etc.). At each level of detail, including level 0, the important point is to be able to measure the resources used, including elapsed time, at that component level. For example, the Database component could be decomposed into a series of SQL statements at process map level 1. If this decomposition is done, the resource usage and timings of the individual SQL statements need to be measured for analysis. This approach of mapping out, decomposing and measuring the workflow tasks is a ‘top down’, or divide and conquer, way of homing in on the offending component.

It is useful (although not essential) to have a load testing tool that can generate traffic against individual system components as the analysis proceeds. For example, just the SQL traffic could be generated to characterize database access performance. This capability not only lets you scrutinize the individual components but also helps with the next phase (Improve), when alternatives are tested.
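
The decompose-and-measure idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the step names ("validate order", "SQL: check stock", "SQL: insert order") are hypothetical level 1 steps for a Place Order transaction, the `time.sleep` calls stand in for real work, and `timed` and `rank_steps` are helper names invented for this sketch rather than part of any load testing tool.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Elapsed-time samples (seconds) collected per workflow step.
samples = defaultdict(list)


@contextmanager
def timed(step):
    """Record the wall-clock time spent inside the with-block under `step`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        samples[step].append(time.perf_counter() - start)


def rank_steps(step_samples):
    """Return (step, mean_seconds, share_of_total) tuples, slowest step first."""
    means = {step: sum(vals) / len(vals) for step, vals in step_samples.items()}
    total = sum(means.values())
    ranked = sorted(means.items(), key=lambda item: item[1], reverse=True)
    return [(step, avg, avg / total if total else 0.0) for step, avg in ranked]


if __name__ == "__main__":
    # Hypothetical level 1 steps; the sleeps are placeholders for real calls.
    for _ in range(5):
        with timed("validate order"):
            time.sleep(0.001)
        with timed("SQL: check stock"):
            time.sleep(0.005)
        with timed("SQL: insert order"):
            time.sleep(0.002)

    for step, avg, share in rank_steps(samples):
        print(f"{step:20s} {avg * 1000:8.2f} ms {share:7.1%}")
```

The step with the largest share of the total is the natural candidate for the next level of decomposition. Elapsed time is only one of the resources mentioned above; CPU, memory and network figures would need their own instrumentation.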

The Improve phase of Six Sigma DMAIC. Design of experiments is a statistical approach to identifying root cause or optimal performance. There are many good papers on the internet on this subject, but for the purposes of this article I will focus on hypothesis testing. In summary, hypothesis testing means forming an idea of the cause of an issue (or an idea for improvement) and then setting up experiments to support or refute that idea (i.e. to test the validity of the hypothesis).

In practice, hypothesis testing involves isolating a factor and then running performance tests that vary only that factor. For example, your hypothesis might be that when the Delivery date on the Place Order transaction falls in the next year, there is a data access issue with multiple SQL statements being invoked. Running performance tests that vary just the date will confirm or eliminate the date as the ‘culprit’. If the delivery date proves to be the cause of the performance issue then, once countermeasures have been applied (possibly query optimization), further hypothesis testing can verify the countermeasure.

One important set of experiments to run after the system has been changed verifies that other transactions have not been adversely affected by the changes (database optimizations in particular). This is the ‘rob Peter to pay Paul’ scenario: almost any database transaction can be improved by redesigning the database around it, but at the expense of other transactions. In summary, hypothesis testing plays an important role in analyzing root cause and in showing that the system changes satisfy the load testing project goals without adversely affecting other system functions.
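
As a rough illustration of testing the delivery date hypothesis, the sketch below compares two sets of response times with a one-sided permutation test. The technique is my choice for the example (a t-test or similar would serve equally well), `permutation_test` is a name invented here, and the response times are made-up placeholder numbers, not measurements from a real system.

```python
import random


def permutation_test(baseline, variant, n_resamples=5000, seed=0):
    """One-sided permutation test: is the variant slower on average?

    Returns (observed difference in means, approximate p-value).
    """
    rng = random.Random(seed)
    n_base = len(baseline)
    n_var = len(variant)
    observed = sum(variant) / n_var - sum(baseline) / n_base

    pooled = list(baseline) + list(variant)
    at_least_as_extreme = 0
    for _ in range(n_resamples):
        # Reassign the group labels at random and recompute the difference.
        rng.shuffle(pooled)
        diff = sum(pooled[n_base:]) / n_var - sum(pooled[:n_base]) / n_base
        if diff >= observed:
            at_least_as_extreme += 1

    # The add-one correction avoids reporting a p-value of exactly zero.
    p_value = (at_least_as_extreme + 1) / (n_resamples + 1)
    return observed, p_value


if __name__ == "__main__":
    # Made-up response times in seconds, for illustration only.
    this_year = [1.02, 0.98, 1.10, 1.05, 0.95, 1.00, 1.07, 0.99]
    next_year = [1.95, 2.10, 2.05, 1.90, 2.20, 2.00, 1.98, 2.15]

    diff, p = permutation_test(this_year, next_year)
    print(f"mean difference: {diff:.2f} s, p-value: {p:.4f}")
```

A small p-value suggests that the slowdown is unlikely to be run-to-run noise, which supports the hypothesis. The same function could be applied to before and after samples of each of the other transactions, as one way of checking for the ‘rob Peter to pay Paul’ effect once a countermeasure is in place.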

Summary.