Implementing the appropriate software load testing workload

Given a software load testing workload specification appropriate for the project, the next step is to:

Create the load testing scripts that meet the workload specifications.

Reproducing the defined workload

Given a specification of what workload is required to satisfy the load testing project objectives, the workload behavior needs to be implemented in a load testing tool. How the workload requirements map onto a given tool will vary with that tool's architecture. The following sections describe some general workload characteristics, each with a discussion of how various load testing tools implement it. These characteristics were selected as examples of the issues, tradeoffs and potential solutions encountered when implementing workload requirements in a given load testing tool.

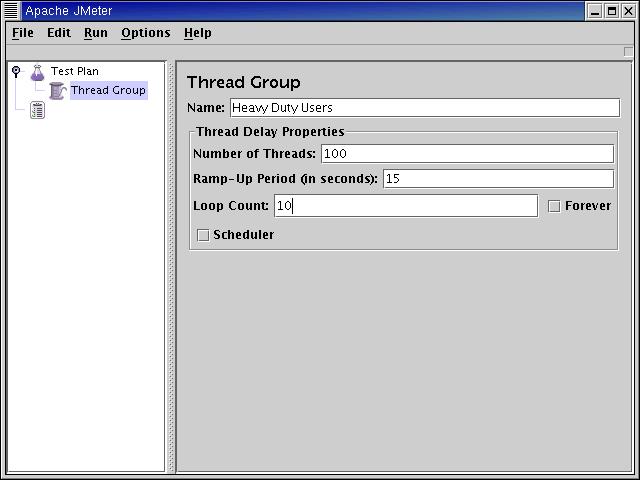

User equivalents

User Equivalents are typically implemented as concurrently running ‘threads’ within the load testing program. JMeter, for example, sets the number of threads on a Thread Group, and the number of threads will approximate the number of required User Equivalents.
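
The thread-per-user model can be illustrated outside any particular tool. The following is a minimal Python sketch, not how JMeter itself is implemented: `run_user_equivalents` and the `send_request` callback are hypothetical names, `send_request` stands in for whatever request a real script would make to the software under test, and a fixed wait between requests is assumed.

```python
import threading
import time
from typing import Callable


def run_user_equivalents(
    num_users: int,
    requests_per_user: int,
    think_time_s: float,
    send_request: Callable[[int], None],
) -> int:
    """Run num_users threads, each thread acting as one User Equivalent.

    send_request is a hypothetical placeholder for a real request to the
    software under test; it receives the id of the calling user.
    Returns the total number of requests made by all users.
    """
    lock = threading.Lock()
    total = 0

    def user(user_id: int) -> None:
        nonlocal total
        for _ in range(requests_per_user):
            send_request(user_id)
            with lock:  # several threads update the shared counter
                total += 1
            time.sleep(think_time_s)  # the wait governs this user's request rate

    threads = [threading.Thread(target=user, args=(i,)) for i in range(num_users)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every simulated user has finished
    return total


if __name__ == "__main__":
    made = run_user_equivalents(5, 3, 0.01, lambda uid: None)
    print(made)  # 15
```

Each thread holds its own state and sleeps independently, which is why the number of threads maps naturally onto the number of concurrent User Equivalents.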

Requests per Second (rate)

Within the threaded model (e.g. JMeter), where each thread simulates a User Equivalent, it is common to control the requests per second with a wait inside the load testing script. The wait effectively governs the rate at which each thread sends requests to the software under test. Because a single thread can send at most one request per server response time, increasing the requests per second beyond that limit requires executing multiple concurrent User Equivalents (threads).

Consider an example workload definition of a population of 100 concurrent Users placing orders at an overall rate of 10 requests per second. To achieve the 10 requests per second rate for the population, each user would need to wait approximately 10 seconds between requests. The calculation is: individual rate (0.1 requests per second) * number of concurrent users (100) = 0.1 * 100 = 10 requests per second for the population of users. As can be seen, achieving a higher rate requires executing more concurrent users or reducing the individual wait times within the scripts.

A question that often comes up regarding the relationship between concurrent users (threads) and requests per second is: "I want to simulate 1,000 users, each with an average wait of 10 seconds, to achieve an overall rate of 100 requests per second (0.1 * 1,000). Can I use 500 users with a wait of just 5 seconds to achieve the same rate (0.2 * 500 = 100 requests per second)?" The answer to this type of question is always: it depends. Running more users raises specific resource usage issues, including cached data, security checks, TCP connections, etc. If the user population is specified in the workload requirements, then that number should be implemented in the workload implementation for the given load testing tool.
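
The arithmetic above can be captured in two small helper functions. This Python sketch uses hypothetical names (`population_rate`, `think_time_for_rate`) and adds one refinement that the text does not state: each user's cycle is assumed to be its wait plus the server's response time, as in the interactive response time law X = N / (R + Z). With a response time of zero it reduces to the simplified calculation in the text.

```python
def population_rate(num_users: int, think_time_s: float, response_time_s: float = 0.0) -> float:
    """Overall requests per second for a closed population of users.

    Assumption: each user completes one request every
    (think_time_s + response_time_s) seconds.
    """
    cycle_time = think_time_s + response_time_s
    if cycle_time <= 0:
        raise ValueError("cycle time must be positive")
    return num_users / cycle_time


def think_time_for_rate(num_users: int, target_rps: float, response_time_s: float = 0.0) -> float:
    """Wait each user needs so that num_users together produce target_rps."""
    if target_rps <= 0:
        raise ValueError("target rate must be positive")
    think = num_users / target_rps - response_time_s
    if think < 0:
        # Even with no wait, this many users cannot reach the target rate.
        raise ValueError("not enough users for the target rate at this response time")
    return think


if __name__ == "__main__":
    print(population_rate(100, 10))          # 10.0, the order-placing example
    print(population_rate(1000, 10))         # 100.0
    print(population_rate(500, 5))           # 100.0, same rate with half the users
    print(think_time_for_rate(100, 10, 0.5)) # 9.5 if responses take 0.5 s
```

The last call shows why the text says "approximately 10 seconds": if responses take 0.5 seconds, each user's wait must shrink to 9.5 seconds to hold the same overall rate.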

Web Users connection strategy

Each load testing tool will have its own implementation for handling embedded references (the images, stylesheets, scripts and other resources referenced by a retrieved web page). Some tools may reuse the original TCP connection and retrieve these resources serially rather than in parallel on concurrent TCP connections. Other load testing tools might try to replicate the web browser's connection strategy for handling these secondary resources by opening additional TCP connections for each Virtual User. Any extra TCP connections opened for the embedded references will consume additional resident memory and draw on the server's pool of TCP connections. Whether these additional resources are material depends on the objectives of the given load test. In any event, the test architect should understand the web connection strategy of the given load testing tool so that the workload requirements can be implemented as specified. Where the tool does not support opening multiple TCP connections while retrieving embedded references, supplementary load could be placed on the server (in parallel) to simulate these extra TCP connections and URL retrievals.
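
To size the supplementary load, it can help to estimate how many concurrent connections a browser-like strategy would open compared with a serial one. The Python sketch below is a simplified model under stated assumptions: every page has the same number of embedded references, all come from one host, and the browser caps parallel connections per host (six is a common per-host limit in current browsers for HTTP/1.1; HTTP/2 multiplexes over a single connection, so the gap may not exist there). The function names are hypothetical.

```python
def connections_per_user(num_embedded: int, max_parallel: int) -> int:
    """Peak TCP connections one virtual user holds while fetching a page.

    Simplified model (assumption): the embedded references are spread over
    up to max_parallel connections, the original connection included.
    max_parallel = 1 models a tool that reuses one connection serially.
    """
    if max_parallel < 1:
        raise ValueError("max_parallel must be at least 1")
    return max(1, min(max_parallel, num_embedded))


def supplementary_connections(
    num_users: int, num_embedded: int, browser_parallel: int, tool_parallel: int = 1
) -> int:
    """Extra concurrent connections needed to make up the difference when
    the tool opens fewer connections per user than a browser would."""
    gap = connections_per_user(num_embedded, browser_parallel) - connections_per_user(
        num_embedded, tool_parallel
    )
    return num_users * max(0, gap)


if __name__ == "__main__":
    # 100 users, 20 embedded references per page, browser-like limit of 6
    print(supplementary_connections(100, 20, 6))  # 500
```

The estimate only bounds connection count; the memory and server-side TCP pool effects described above still need to be confirmed by measurement.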

Summary