Measuring software performance within a Six Sigma load testing project.

Measurement is at the heart of both Six Sigma and software performance testing.

In God we trust, all others must bring data - W. Edwards Deming.

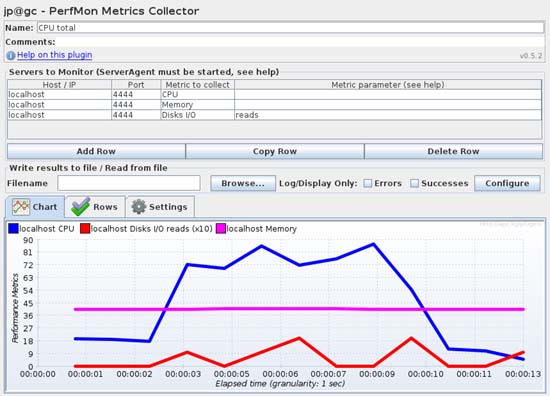

Within Six Sigma the M in DMAIC stands for Measure, and good data together with accurate measurements are essential for a successful Six Sigma project. Using a Six Sigma approach, the project plan would lay out What is to be measured along with How each measurement will be taken. The calculations or charts in which the measurements are to be used would also be specified and cross-referenced in the project plan.

In Six Sigma manufacturing projects, the measurement system is tested, including verification (calibration) of the various gauges and tools that take the measurements. In software load testing projects we can do a similar exercise of calibrating our measurement tools. Take CPU and memory as standard measures that might be taken during a stability test of an individual API call. The objective of such a test would be to verify, possibly during development, that the resource usage of a given API is indeed stable, without memory leaks and without excessive CPU usage. For such tests it is important that the methods (and tools) for measuring the CPU and memory usage of individual code fragments are identified and known to be reliable. Such software measuring tools should be verified against known pieces of code (where the memory usage and CPU usage can be varied) so that the overall accuracy of each tool can be determined and compared with other tools running the same tests.

Another consideration when selecting measuring software is granularity, the level of detail of the measurements themselves. Measuring memory in bytes or CPU usage to 0.01 of a percent might not always be appropriate, but if this level of detail is required then the tool should be capable of measuring accurately at that level.
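
As a minimal sketch of the calibration step, assuming a Python code base and using the standard-library `tracemalloc` module as the tool under test, the code below allocates buffers of known size and compares the tool's reported peak with the size requested. The function names `measured_peak_bytes` and `calibrate` and the 5% tolerance are illustrative assumptions, not an established procedure; the same pattern would apply to other memory or CPU profilers.

```python
import tracemalloc


def measured_peak_bytes(n_bytes: int) -> int:
    """Allocate a buffer of known size and return the peak memory traced by tracemalloc."""
    tracemalloc.start()
    try:
        buf = bytearray(n_bytes)  # the "known piece of code": allocates n_bytes
        _current, peak = tracemalloc.get_traced_memory()
        del buf
    finally:
        tracemalloc.stop()
    return peak


def calibrate(sizes, tolerance=0.05):
    """Compare the tool's reading with the known allocation for each size.

    Returns (expected, measured, relative_error, within_tolerance) tuples.
    The 5% tolerance is an arbitrary assumption for illustration.
    """
    results = []
    for expected in sizes:
        measured = measured_peak_bytes(expected)
        rel_error = abs(measured - expected) / expected
        results.append((expected, measured, rel_error, rel_error <= tolerance))
    return results


if __name__ == "__main__":
    for expected, measured, err, ok in calibrate([100_000, 1_000_000, 10_000_000]):
        status = "OK" if ok else "OUT OF TOLERANCE"
        print(f"{expected:>12,} B expected  {measured:>12,} B measured  error {err:.3%}  {status}")
```

Running the same known workloads through a second tool and comparing the relative errors is one way to rank tools on accuracy. Each reading also includes a small fixed overhead (the buffer object itself), which is one reason very small allocations show a larger relative error; that is a granularity question in its own right.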

What are the typical measurements for a software load testing project?

System response time

User (or system) response time, often called a user-perceived measure, indicates the impact of system performance on the people using it. Response time is typically stated as a performance requirement (a non-functional specification), but on its own it tells us little about where the issues (if any) lie within the system.

CPU usage

As a resource, the CPU is critical to all data processing and should be measured and monitored during all load tests. The CPU can become a ‘bottleneck’ when in high demand (as the CPU queue builds), and individual processes (programs) could take more than their fair share of this valuable resource. CPU usage and queuing patterns should be among the first performance measures taken during a load testing project.

Memory usage

As with CPU, memory usage is critical to the overall performance of the software under test. For initial stability performance testing, detecting memory leaks will be a major project focus. For data access, cache hit measurements (whether data is served from the memory cache or retrieved from disk) are essential for deciding the size of the data cache and which items should or should not be cached. Alongside cache usage, Disk IO should be measured to build up a profile of the data access demands of the software under test. Data access, usually via SQL, is typically the cause of the longest delays in processing a given transaction.

Disk IO

Disk IO should be measured and, where appropriate, accompanied by SQL profiling of transactions. For a given data processing transaction, the time taken to access the actual data will be a major proportion of the overall response time that the user experiences. Many countermeasures (improvements) to performance issues involve data reorganization, SQL optimization and/or data caching.
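
Several of the measures above reduce to simple calculations once samples have been collected. The sketch below, in Python and assuming the samples are already available as lists of numbers, shows a nearest-rank percentile for response times, a least-squares trend of memory readings taken after each iteration of a stability test (a persistent upward slope is one sign of a leak), and a cache hit ratio. The function names and the 1 KiB-per-iteration leak threshold are hypothetical choices for illustration.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile, e.g. p=95 for the 95th percentile response time."""
    if not values:
        raise ValueError("percentile of an empty sequence")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def memory_growth_per_iteration(samples: Sequence[float]) -> float:
    """Least-squares slope of memory readings (e.g. bytes) against iteration number."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    num = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(samples))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


def looks_like_leak(samples: Sequence[float], threshold_bytes_per_iter: float = 1024.0) -> bool:
    """Flag a possible leak when memory keeps growing faster than the assumed threshold."""
    return memory_growth_per_iteration(samples) > threshold_bytes_per_iter


def cache_hit_ratio(hits: int, misses: int) -> float:
    """Fraction of lookups served from cache rather than from disk."""
    total = hits + misses
    return hits / total if total else 0.0


if __name__ == "__main__":
    # Illustrative values only, not measurements from a real system.
    response_ms = [112, 98, 105, 230, 101, 99, 120, 97, 103, 180]
    print("p95 response time (ms):", percentile(response_ms, 95))
    print("leak suspected:", looks_like_leak([50_000_000 + 4096 * i for i in range(20)]))
    print("cache hit ratio:", cache_hit_ratio(hits=9_400, misses=600))
```

In practice memory readings are noisy (garbage collection, caches warming up), so the trend is usually judged over many iterations after a warm-up period rather than from a handful of samples.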

Network

Whether the data is travelling over the LAN or WAN, the delay in getting from point A to point B should be measured. The volume of data traffic should also be measured, as it is positively correlated with the overall time the network takes to deliver the traffic (the more data, the longer it takes). Understanding, via measurement, how the network traffic for a given application behaves will not only characterize the overall resource usage but also shed light on potential improvements, such as duplicating data or bringing data and processing closer together (e.g. moving calculations into SQL procedures).

Queuing

It is important to measure not only the resource usage (be it CPU, memory, disk controller or network) for various performance testing scenarios, but also the queue time for each of these resources. Under heavy load, the time a transaction spends waiting for a resource will typically exceed the time it spends using the resource. Measuring the various resource queues as the workload increases gives a good indication of where the bottlenecks are and where improvements could be made. Take CPU as an example: its measures include the actual CPU utilization (as a percentage of capacity) and the processor queue length, which is the number of processes waiting to use the CPU. A high CPU utilization (80% or more) is not, on its own, an indication that the CPU is overloaded. It is the combination of high CPU utilization and a lengthy processor queue that indicates the CPU is indeed overloaded. Reducing wait times for resources is a common strategy for improving the overall performance of a system.
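
The CPU overload rule described above, high utilization combined with a lengthy processor queue, can be expressed as a small check. The sketch below is written in Python; the `CpuSample` type, the 80% utilization threshold taken from the text and the queue threshold of more than two waiting processes per core (a commonly quoted rule of thumb) are assumptions. It also includes the textbook M/M/1 queue formula, Wq = rho / (1 - rho) * S, as a simplified illustration of why waiting can dominate: under that model, waiting time exceeds service time once utilization passes 50%, and at 80% utilization the mean wait is about four times the service time. Real systems rarely satisfy the M/M/1 assumptions (Poisson arrivals, exponential service times, a single server), so treat it as intuition rather than a prediction.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class CpuSample:
    utilization_pct: float         # % of total CPU capacity in use
    processor_queue_length: float  # processes/threads waiting for a CPU


def cpu_overloaded(samples: Sequence[CpuSample], cores: int,
                   util_threshold: float = 80.0,
                   queue_per_core_threshold: float = 2.0) -> bool:
    """High utilization alone is not enough: also require a long processor queue.

    Both thresholds are rule-of-thumb assumptions, applied to averages over the window.
    """
    if not samples:
        raise ValueError("no samples")
    mean_util = sum(s.utilization_pct for s in samples) / len(samples)
    mean_queue = sum(s.processor_queue_length for s in samples) / len(samples)
    return mean_util >= util_threshold and mean_queue > queue_per_core_threshold * cores


def mm1_mean_wait(service_time: float, utilization: float) -> float:
    """Mean time spent queuing in a simple M/M/1 model: Wq = rho / (1 - rho) * S."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return utilization / (1 - utilization) * service_time


if __name__ == "__main__":
    # Illustrative samples only: a busy CPU with a short queue vs. a busy CPU with a long queue.
    print(cpu_overloaded([CpuSample(90, 1), CpuSample(85, 2)], cores=4))
    print(cpu_overloaded([CpuSample(92, 12), CpuSample(95, 14)], cores=4))
    print(mm1_mean_wait(service_time=10.0, utilization=0.8))
```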

Summary

Making appropriate and accurate measurements is essential to any Six Sigma based project, including load testing. Identifying What needs to be measured, together with How best to take each measurement, should be part of the project planning phase. From a user perspective, the overall response time is the prime indicator of a system’s performance capability. All measurements taken, including their granularity, should be aligned with the overall goals of the performance project. Typical performance measures include overall system response time, CPU, memory (including cache), Disk IO and network traffic. It is important not only to measure resource utilization but also to measure and understand the queues (waits) for those resources.